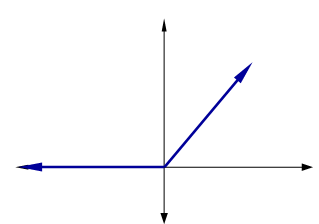

`PReLU(x) = {[ x if x > 0],[alpha*x if x <=0]}`

The PReLU Function calculator computes the Parametric Rectified Linear Unit (PReLU) value of an input x for a given slope parameter α.

INSTRUCTIONS: Enter the following:

- (x) A real number

- (α) Slope parameter applied to non-positive inputs

PReLU(x): The calculator returns a real number

The Math / Science

The formula for the PReLU Function is:

`PReLU(x) = {[ x if x > 0],[alpha*x if x <=0]}`

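Where α is the slope applied to non-positive inputs. Unlike Leaky ReLU, where α is a fixed small constant (commonly 0.01), PReLU treats α as a learnable parameter that is trained together with the network weights; He et al. initialized it to 0.25. Setting α = 0 reduces PReLU to the standard ReLU.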
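The piecewise definition translates directly into code. The sketch below is a minimal scalar version, assuming plain Python floats; the function name `prelu` is hypothetical and not taken from any particular library.

```python
def prelu(x: float, alpha: float) -> float:
    """PReLU(x) = x if x > 0, otherwise alpha * x."""
    # Positive inputs pass through unchanged; non-positive inputs are scaled by alpha.
    return x if x > 0 else alpha * x


if __name__ == "__main__":
    print(prelu(3.0, 0.25))   # 3.0
    print(prelu(-2.0, 0.25))  # -0.5
    # alpha = 0 reproduces ReLU; a fixed small alpha such as 0.01 reproduces Leaky ReLU.
```

The only difference from Leaky ReLU is where α comes from: here it is simply an argument, while in a network it would be a trained parameter.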

Key Characteristics:

- Adaptivity: The model learns the optimal slope for negative inputs, which can lead to better performance.

- Mitigates the dying ReLU problem more flexibly than Leaky ReLU, because the negative slope is learned rather than fixed.

- Can improve accuracy, especially in deep networks.
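To illustrate how the slope is learned, note that the derivative of PReLU with respect to α is 0 for x > 0 and x for x ≤ 0. The toy sketch below fits a single shared α by plain gradient descent on mean squared error. The helper names, the data, the learning rate, and the "true" slope of 0.1 are all hypothetical; a real network would obtain the same gradient through backpropagation.

```python
def prelu(x: float, alpha: float) -> float:
    # Forward pass: identity for x > 0, slope alpha for x <= 0
    return x if x > 0 else alpha * x


def prelu_grad_alpha(x: float) -> float:
    # dPReLU/dalpha: 0 for positive inputs, x for non-positive inputs
    return 0.0 if x > 0 else x


def fit_alpha(xs, targets, alpha=0.25, lr=0.1, steps=200):
    """Hypothetical helper: fit one shared alpha by gradient descent on mean squared error."""
    n = len(xs)
    for _ in range(steps):
        # dL/dalpha = (2/n) * sum((PReLU(x) - t) * dPReLU/dalpha)
        grad = sum(
            2.0 * (prelu(x, alpha) - t) * prelu_grad_alpha(x)
            for x, t in zip(xs, targets)
        ) / n
        alpha -= lr * grad
    return alpha


if __name__ == "__main__":
    xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
    # Toy targets generated with an assumed "true" slope of 0.1
    targets = [prelu(x, 0.1) for x in xs]
    print(fit_alpha(xs, targets))  # converges toward 0.1
```

Only non-positive inputs contribute to the gradient, so α adapts to the negative side of the data; if every input were positive, α would never move.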

Considerations:

- Slightly increases model complexity due to the extra learnable parameters (one α per channel, or a single α shared across a layer).

- Needs careful regularization to avoid overfitting, especially if many parameters are introduced.

- PReLU was introduced in the paper "Delving Deep into Rectifiers" by Kaiming He et al. (2015), which also popularized the idea of He initialization for weights in ReLU-based networks.
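The same paper extends He initialization to account for the PReLU slope: weights are drawn from a zero-mean Gaussian whose variance satisfies ½(1 + α²)·n·Var[w] = 1, where n is the layer's fan-in (k·k·c for a k×k convolution over c input channels). Setting α = 0 recovers the familiar ReLU case, std = √(2/n). The sketch below assumes plain Python lists and a fixed seed; `he_std` and `he_init` are hypothetical helper names.

```python
import math
import random


def he_std(fan_in: int, alpha: float = 0.0) -> float:
    """Std of He initialization for a layer followed by PReLU with slope alpha.

    From (1/2) * (1 + alpha**2) * fan_in * Var[w] = 1;
    alpha = 0 gives the ReLU case sqrt(2 / fan_in).
    """
    return math.sqrt(2.0 / ((1.0 + alpha ** 2) * fan_in))


def he_init(fan_in: int, fan_out: int, alpha: float = 0.0, seed: int = 0):
    """Hypothetical helper: fan_out x fan_in weight matrix drawn from N(0, he_std^2)."""
    rng = random.Random(seed)
    std = he_std(fan_in, alpha)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


if __name__ == "__main__":
    # For a 3x3 convolution with 64 input channels, fan_in = 3 * 3 * 64 = 576
    print(he_std(576))        # ReLU case
    print(he_std(576, 0.25))  # PReLU with alpha initialized to 0.25
```

Because (1 + α²) ≥ 1, a PReLU layer gets a slightly smaller initial weight scale than a ReLU layer with the same fan-in.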

Artificial Intelligence and Machine Learning Calculators

- Sigmoid Function

- Number of Nodes in a Hidden Layer of a Neural Network

- tanh (hyperbolic tangent)

- ReLU (Rectified Linear Unit)

- ELU (Exponential Linear Unit)

- PReLU (Parametric Rectified Linear Unit)

- Leaky ReLU

- Signum

- Matthews Correlation Coefficient